Future-proof ethics

Ethics based on "common sense" seems to have a horrible track record.

That is: simply going with our intuitions and societal norms has, in the past, meant endorsing all kinds of insanity. To quote an article by Kwame Anthony Appiah:

Once, pretty much everywhere, beating your wife and children was regarded as a father's duty, homosexuality was a hanging offense, and waterboarding was approved -- in fact, invented -- by the Catholic Church. Through the middle of the 19th century, the United States and other nations in the Americas condoned plantation slavery. Many of our grandparents were born in states where women were forbidden to vote. And well into the 20th century, lynch mobs in this country stripped, tortured, hanged and burned human beings at picnics.

Looking back at such horrors, it is easy to ask: What were people thinking?

Yet, the chances are that our own descendants will ask the same question, with the same incomprehension, about some of our practices today.

Is there a way to guess which ones?

This post kicks off a series on the approach to ethics that I think gives us our best chance to be "ahead of the curve": consistently making ethical decisions that look better, with hindsight after a great deal of moral progress, than what our peer-trained intuitions tell us to do.

"Moral progress" here refers to both societal progress and personal progress. I expect some readers will be very motivated by something like "Making ethical decisions that I will later approve of, after I've done more thinking and learning," while others will be more motivated by something like "Making ethical decisions that future generations won't find abhorrent." (More on "moral progress" in this follow-up piece.)

Being "future-proof" isn't necessarily the end-all be-all of an ethical system. I tend to "compromise" between the ethics I'll be describing here - which is ambitious, theoretical, and radical - and more "common-sense"/intuitive approaches to ethics that are more anchored to conventional wisdom and the "water I swim in."

But if I simply didn't engage in philosophy at all, and didn't try to understand and incorporate "future-proof ethics" into my thinking, I think that would be a big mistake - one that would lead to a lot of other moral mistakes, at least from the perspective of a possible future world (or a possible Holden) that has seen a lot of moral progress.

Indeed, I think some of the best opportunities to do good in the world come from working on issues that aren't yet widely recognized as huge moral issues of our time.

For this reason, I think the state of "future-proof ethics" is among the most important topics out there, especially for people interested in making a positive difference to the world on very long timescales. Understanding this topic can also make it easier to see where some of the unusual views about ethics in the effective altruism community come from: that we should more highly prioritize the welfare of animals, potentially even insects, and most of all, future generations.

With that said, some of my thinking on this topic can get somewhat deep into the weeds of philosophy. So I am putting up a lot of the underlying content for this series on the EA Forum alone, and the pieces that appear on Cold Takes will try to stick to the high-level points and big picture.

Outline of the rest of this piece:

- Most people's default approach to ethics seems to rely on "common sense"/intuitions influenced by peers. If we want to be "ahead of the curve," we probably need a different approach.

- The most credible candidate for a future-proof ethical system, to my knowledge, rests on three basic pillars:

  - Systemization: seeking an ethical system based on consistently applying fundamental principles, rather than handling each decision with case-specific intuitions.

  - Thin utilitarianism: prioritizing the "greatest good for the greatest number," while not necessarily buying into all the views traditionally associated with utilitarianism.

  - Sentientism: counting anyone or anything with the capacity for pleasure and suffering - whether an animal, a reinforcement learner (a type of AI), etc. - as a "person" for ethical purposes.

- Combining these three pillars yields a number of unusual, even uncomfortable views about ethics. I feel this discomfort and don't unreservedly endorse this approach to ethics. But I do find it powerful and intriguing.

- An appendix explains why I think other well-known ethical theories don't provide the same "future-proof" hopes; another appendix notes some debates about utilitarianism that I am not engaging in here.

Later in this series, I will:

- Use a series of dialogues to illustrate how specific, unusual ethical views fit into the "future-proof" aspiration.

- Summarize what I see as the biggest weaknesses of "future-proof ethics."

- Discuss how to compromise between "future-proof ethics" and "common-sense" ethics, drawing on the nascent literature about "moral uncertainty."

"Common-sense" ethics

For a sense of what I mean by a "common-sense" or "intuitive" approach to ethics, see this passage from a recent article on conservatism:

Rationalists put a lot of faith in “I think therefore I am”—the autonomous individual deconstructing problems step by logical step. Conservatives put a lot of faith in the latent wisdom that is passed down by generations, cultures, families, and institutions, and that shows up as a set of quick and ready intuitions about what to do in any situation. Brits don’t have to think about what to do at a crowded bus stop. They form a queue, guided by the cultural practices they have inherited ...

In the right circumstances, people are motivated by the positive moral emotions—especially sympathy and benevolence, but also admiration, patriotism, charity, and loyalty. These moral sentiments move you to be outraged by cruelty, to care for your neighbor, to feel proper affection for your imperfect country. They motivate you to do the right thing.

Your emotions can be trusted, the conservative believes, when they are cultivated rightly. “Reason is, and ought only to be the slave of the passions,” David Hume wrote in his Treatise of Human Nature. “The feelings on which people act are often superior to the arguments they employ,” the late neoconservative scholar James Q. Wilson wrote in The Moral Sense.

The key phrase, of course, is cultivated rightly. A person who lived in a state of nature would be an unrecognizable creature ... If a person has not been trained by a community to tame [their] passions from within, then the state would have to continuously control [them] from without.

I'm not sure "conservative" is the best descriptor for this general attitude toward ethics. My sense is that most people's default approach to ethics - including many people for whom "conservative" is the last label they'd want - has a lot in common with the above vision. Specifically: rather than picking some particular framework from academic philosophy such as "consequentialism," "deontology" or "virtue ethics," most people have an instinctive sense of right and wrong, which is "cultivated" by those around them. Their ethical intuitions can be swayed by specific arguments, but they're usually not aiming to have a complete or consistent ethical system.

As remarked above, this "common sense" (or perhaps more precisely, "peer-cultivated intuitions") approach has gone badly wrong many times in the past. Today's peer-cultivated intuitions are different from the past's, but as long as that's the basic method for deciding what's right, it seems one has the same basic risk of over-anchoring to "what's normal and broadly accepted now," and not much hope of being "ahead of the curve" relative to one's peers.

Most writings on philosophy are about comparing different "systems" or "frameworks" for ethics (e.g., consequentialism vs. deontology vs. virtue ethics). By contrast, this series focuses on the comparison between non-systematic, "common-sense" ethics and an alternative approach that aims to be more "future-proof," at the cost of departing more from common sense.

Three pillars of future-proof ethics

Systemization

We're looking for a way of deciding what's right and wrong that doesn't just come down to "X feels intuitively right" and "Y feels intuitively wrong." Systemization means: instead of judging each case individually, look for a small set of principles that we deeply believe in, and derive everything else from those.

Why would this help with "future-proofing"?

One way of putting it might be that:

(A) Our ethical intuitions are sometimes "good" but sometimes "distorted" by e.g. biases toward helping people like us, or inability to process everything going on in a complex situation.

(B) If we derive our views from a small number of intuitions, we can give these intuitions a lot of serious examination, and pick ones that seem unusually unlikely to be "distorted."

(C) Analogies to science and law also provide some case for systemization. Science seeks "truth" via systemization and law seeks "fairness" via systemization; these are both arguably analogous to what we are trying to do with future-proof ethics.

A bit more detail on (A)-(C) follows.

(A) Our ethical intuitions are sometimes "good" but sometimes "distorted." Distortions might include:

- When our ethics are pulled toward what’s convenient for us to believe. For example, that one’s own nation/race/sex is superior to others, and that others’ interests can therefore be ignored or dismissed.

- When our ethics are pulled toward what’s fashionable and conventional in our community (which could be driven by others’ self-serving thinking).

- When we're instinctively repulsed by someone for any number of reasons, including that they’re just different from us, and we confuse this for intuitions that what they’re doing is wrong. For example, consider the large amount of historical and present intolerance for unconventional sexuality, gender identity, etc.

- When our intuitions become "confused" because they're fundamentally not good at dealing with complex situations. For example, we might have very poor intuitions about the impact of some policy change on the economy, and end up making judgments about such a policy in pretty random ways - like imagining a single person who would be harmed or helped by a policy.

It's very debatable what it means for an ethical view to be "not distorted." Some people (“moral realists”) believe that there are literal ethical “truths,” while others (what I might call “moral quasi-realists,” including myself) believe that we are simply trying to find patterns in what ethical principles we would embrace if we were more thoughtful, informed, etc. But either way, the basic thinking is that some of our ethical intuitions are more reliable than others - more "really about what is right" and less tied to the prejudices of our time.

(B) If we derive our views from a small number of intuitions, we can give these intuitions a lot of serious examination, and pick ones that seem unusually unlikely to be "distorted."

The below sections will present two ideas - thin utilitarianism and sentientism - that:

- Have been subject to a lot of reflection and debate.

- Can be argued for based on very general principles about what it means for an action to be ethical. Different people will see different levels of appeal in these principles, but they do seem unusually unlikely to be contingent on conventions of our time.

- Can be used (together) to derive a large number of views about specific ethical decisions.

(C) Analogies to science and law also provide some case for systemization.

Analogy to science. In science, it seems to be historically the case that aiming for a small, simple set of principles that generates lots of specific predictions has been a good rule,1 and an especially good way to be "ahead of the curve" in being able to understand things about the world.

For example, if you’re trying to predict when and how fast objects will fall, you can probably make pretty good gut-based guesses about relatively familiar situations (a rock thrown in the water, a vase knocked off a desk). But knowing the law of gravitation - a relatively simple equation that explains a lot of different phenomena - allows much more reliable predictions, especially about unfamiliar situations.
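As a small illustration of the point about unfamiliar situations, here is a minimal Python sketch. It assumes the constant-acceleration approximation near a surface (a consequence of the law of gravitation, not the full law itself) and ignores air resistance. The function names and the example heights are hypothetical, chosen only for illustration.

```python
import math

# Approximate gravitational acceleration near Earth's surface, in m/s^2.
G_EARTH_SURFACE = 9.81
# Approximate gravitational acceleration near the Moon's surface, in m/s^2.
G_MOON_SURFACE = 1.62


def fall_time(height_m: float, g: float = G_EARTH_SURFACE) -> float:
    """Seconds for an object dropped from rest to fall height_m (no air resistance)."""
    if height_m < 0 or g <= 0:
        raise ValueError("height must be non-negative and g must be positive")
    # From h = (1/2) * g * t^2, solved for t.
    return math.sqrt(2.0 * height_m / g)


def impact_speed(height_m: float, g: float = G_EARTH_SURFACE) -> float:
    """Speed in m/s at the end of the fall, from v^2 = 2 * g * h."""
    if height_m < 0 or g <= 0:
        raise ValueError("height must be non-negative and g must be positive")
    return math.sqrt(2.0 * g * height_m)


if __name__ == "__main__":
    desk_height_m = 0.75  # made-up height for a vase knocked off a desk
    print(f"Earth: {fall_time(desk_height_m):.2f} s")
    # A situation few people have gut intuitions about: the same drop on the Moon.
    print(f"Moon:  {fall_time(desk_height_m, G_MOON_SURFACE):.2f} s")
```

The same two-line formula covers the familiar case (a desk on Earth) and the unfamiliar one (the Moon), which is the sense in which a simple principle can outperform gut-based guesses.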

Analogy to law. Legal systems tend to aim for explicitness and consistency. Rather than asking judges to simply listen to both sides and "do what feels right," legal systems tend to encourage being guided by a single set of rules, written down such that anyone can read it, applied as consistently as possible. This practice may increase the role of principles that have gotten lots of attention and debate, and decrease the role of judges' biases, moods, personal interests, etc.

Systemization can be weird. It’s important to understand from the get-go that seeking an ethics based on “deep truth” rather than conventions of the time means we might end up with some very strange, initially uncomfortable-feeling ethical views. The rest of this series will present such uncomfortable-feeling views, and I think it’s important to process them with a spirit of “This sounds wild, but if I don’t want to be stuck with my raw intuitions and the standards of my time, I should seriously consider that this is where a more deeply true ethical system will end up taking me.”

Next I'll go through two principles that, together, can be the basis of a lot of systemization: thin utilitarianism and sentientism.

Thin utilitarianism

I think one of the more remarkable, and unintuitive, findings in philosophy of ethics comes not from any philosopher but from the economist John Harsanyi. In a nutshell:

- Let’s start with a basic, appealing-seeming principle for ethics: that it should be other-centered. That is, my ethical system should be based as much as possible on the needs and wants of others, rather than on my personal preferences and personal goals.

- What I think Harsanyi’s work essentially shows is that if you’re determined to have an other-centered ethics, it pretty strongly looks like you should follow some form of utilitarianism, an ethical system based on the idea that we should (roughly speaking) always prioritize the greatest good for the greatest number of (ethically relevant) beings.

- There are many forms of utilitarianism, which can lead to a variety of different approaches to ethics in practice. However, an inescapable property of all of them (by Harsanyi’s logic) is the need for consistent “ethical weights” by which any two benefits or harms can be compared.

- For example, let’s say we are comparing two possible ways in which one might do good: (a) saving a child from drowning in a pond, or (b) helping a different child to get an education.

- Many people would be tempted to say you “can’t compare” these, or can’t choose between them. But according to utilitarianism, either (a) is exactly as valuable as (b), or it’s half as valuable (meaning that saving two children from drowning is as good as helping one child get an education), or it’s twice as valuable … or 100x as valuable, or 1/100 as valuable, but there has to be some consistent multiplier.

- And that, in turn, implies that for any two ways you can do good - even if one is very large (e.g. saving a life) and one very small (e.g. helping someone avoid a dust speck in their eye) - there is some number N such that N of the smaller benefit is more valuable than the larger benefit. In theory, any harm can be outweighed by something that benefits a large enough number of persons, even if it benefits them in a minor way.

The connection between these points - the steps by which one moves from “I want my ethics to focus on the needs and wants of others” to “I must use consistent moral weights, with all of the strange implications that involves” - is fairly complex, and I haven’t found a compact way of laying it out. I discuss it in detail in an Effective Altruism Forum post: Other-centered ethics and Harsanyi's Aggregation Theorem. I will also try to give a bit more of an intuition for it in the next piece.
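To make the "consistent multiplier" idea a bit more concrete, here is a minimal Python sketch. The weights are invented placeholders in arbitrary units (nothing in Harsanyi's work or in this series supplies actual numbers), and the names `ETHICAL_WEIGHTS`, `relative_value` and `smallest_outweighing_count` are hypothetical. The sketch only shows that once two benefits have fixed positive weights, there is a fixed multiplier between them, and some number N of the smaller benefit outweighs the larger one.

```python
from fractions import Fraction

# Hypothetical weights in arbitrary units. These numbers are invented purely
# for illustration; they are not claims about real moral value.
ETHICAL_WEIGHTS = {
    "save_life": 1_000_000_000,
    "help_child_get_education": 500_000_000,
    "avoid_dust_speck": 1,
}


def relative_value(benefit_a: str, benefit_b: str) -> Fraction:
    """Return the fixed multiplier: how many units of b one unit of a is worth."""
    return Fraction(ETHICAL_WEIGHTS[benefit_a], ETHICAL_WEIGHTS[benefit_b])


def smallest_outweighing_count(larger: int, smaller: int) -> int:
    """Return the smallest whole number N such that N * smaller > larger."""
    if larger <= 0 or smaller <= 0:
        raise ValueError("weights must be positive")
    return larger // smaller + 1


if __name__ == "__main__":
    n = smallest_outweighing_count(
        ETHICAL_WEIGHTS["save_life"], ETHICAL_WEIGHTS["avoid_dust_speck"]
    )
    print(f"Under these made-up weights, {n} dust specks outweigh one life saved.")
    print(relative_value("save_life", "help_child_get_education"))
```

Changing the placeholder weights changes N, but as long as both weights are positive, some finite N always exists. That is the uncomfortable implication described above.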

I'm using the term thin utilitarianism to point at a minimal version of utilitarianism that only accepts what I've outlined above: a commitment to consistent ethical weights, and a belief that any harm can be outweighed by a large enough number of minor benefits. There are a lot of other ideas commonly associated with utilitarianism that I don't mean to take on board here, particularly:

- The "hedonist" theory of well-being: that "helping someone" is reducible to "increasing someone's positive conscious experiences relative to negative conscious experiences." (Sentientism, discussed below, is a related but not identical idea.2)

- An "ends justify the means" attitude.

  - There are a variety of ways one can argue against "ends-justify-the-means" style reasoning, even while committing to utilitarianism (here's one).

  - In general, I'm committed to some non-utilitarian personal codes of ethics, such as (to simplify) "deceiving people is bad" and "keeping my word is good." I'm only interested in applying utilitarianism within particular domains (such as "where should I donate?") where it doesn't challenge these codes.

  - (This applies to "future-proof ethics" generally, but I am noting it here in particular because I want to flag that my arguments for "utilitarianism" are not arguments for "the ends justify the means.")

- More on "thin utilitarianism" at my EA Forum piece.

Sentientism

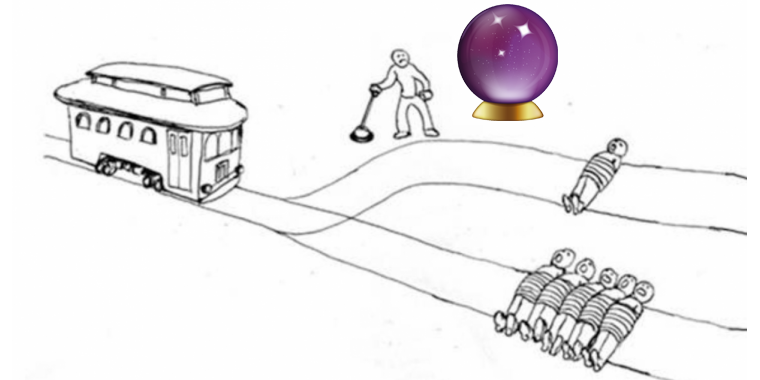

To the extent moral progress has occurred, a lot of it seems to have been about “expanding the moral circle”: coming to recognize the rights of people who had previously been treated as though their interests didn’t matter.

In The Expanding Circle, Peter Singer gives a striking discussion (see footnote)3 of how ancient Greeks seemed to dismiss/ignore the rights of people from neighboring city-states. More recently, people in power have often seemed to dismiss/ignore the rights of people from other nations, people with other ethnicities, and women and children (see quote above). These now look like some of the biggest moral mistakes in history.

Is there a way, today, to expand the circle all the way out as far as it should go? To articulate simple, fundamental principles that give us a complete guide to “who counts” as a person, such that we need to weigh their interests appropriately?

Sentientism is the main candidate I’m aware of for this goal. The idea is to focus on the capacity for pleasure and suffering (“sentience”): if you can experience pleasure and suffering, you count as a “person” for ethical purposes, even if you’re a farm animal or a digital person or a reinforcement learner.

Key quote from 18th-century philosopher Jeremy Bentham: "The question is not, Can they reason?, nor Can they talk? but, Can they suffer? Why should the law refuse its protection to any sensitive being?"

A variation on sentientism would be to say that you count as a "person" if you experience "conscious" mental states at all.4 I don't know of a simple name for this idea, and for now I'm lumping it in with sentientism, as it is pretty similar for my purposes throughout this series.

Sentientism potentially represents a simple, fundamental principle (“the capacity for pleasure and suffering is what matters”) that can be used to generate a detailed guide to who counts ethically, and how much (in other words, what ethical weight should be given to their interests). Sentientism implies caring about all humans, regardless of sex, gender, ethnicity, nationality, etc., as well as potentially about animals, extraterrestrials, and others.

Putting the pieces together

Combining systemization, thin utilitarianism and sentientism results in an ethical attitude something like this:

- I want my ethics to be a consistent system derived from robust principles. When I notice a seeming contradiction between different ethical views of mine, this is a major problem.

- A good principle is that ethics should be about the needs and wants of others, rather than my personal preferences and personal goals. This ends up meaning that I need to judge every action by who benefits and who is harmed, and I need consistent “ethical weights” for weighing different benefits/harms against each other.

- When deciding how to weigh someone’s interests, the key question is the extent to which they’re sentient: capable of experiencing pleasure and suffering.

- Combining these principles can generate a lot of familiar ethical conclusions, such as “Don’t accept a major harm to someone for a minor benefit to someone else,” “Seek to redistribute wealth from people with more to people with less, since the latter benefit more,” and “Work toward a world with less suffering in it.”

- It also generates some stranger-seeming conclusions, such as: “Animals may have significant capacity for pleasure and suffering, so I should assign a reasonably high ‘ethical weight’ to them. And since billions of animals are being horribly treated on factory farms, the value of reducing harm from factory farming could be enormous - to the point where it could be more important than many other issues that feel intuitively more compelling.”

- The strange conclusions feel uncomfortable, but when I try to examine why they feel uncomfortable, I worry that a lot of my reasons just come down to “avoiding weirdness” or “hesitating to care a great deal about creatures very different from me and my social peers.” These are exactly the sorts of thoughts I’m trying to get away from, if I want to be ahead of the curve on ethics.

An interesting additional point is that this sort of ethics arguably has a track record of being "ahead of the curve." For example, here's Wikipedia on Jeremy Bentham, the “father of utilitarianism” (and a major sentientism proponent as well):

He advocated individual and economic freedom, the separation of church and state, freedom of expression, equal rights for women, the right to divorce, and the decriminalizing of homosexual acts. [My note: he lived from 1748 to 1832, well before most of these views were common.] He called for the abolition of slavery, the abolition of the death penalty, and the abolition of physical punishment, including that of children. He has also become known in recent years as an early advocate of animal rights.5

More on this at utilitarianism.net, and some criticism (which I don't find very compelling,6 though I have my own reservations about the "track record" point that I'll share in future pieces) here.

To reiterate, I don’t unreservedly endorse the ethical system discussed in this piece. Future pieces will discuss weaknesses in the case, and how I handle uncertainty and reservations about ethical systems.

But it’s a way of thinking that I find powerful and intriguing. When I act dramatically out of line with what the ethical system I've outlined suggests, I do worry that I’m falling prey to acting by the ethics of my time, rather than doing the right thing in a deeper sense.

Appendix: other candidates for future-proof ethics?

In this piece, I’ve mostly contrasted two approaches to ethics:

- "Common sense" or intuition-based ethics.

- The specific ethical framework that combines systemization, thin utilitarianism and sentientism.

Of course, these aren't the only two options. There are a number of other approaches to ethics that have been extensively explored and discussed within academic philosophy. These include deontology, virtue ethics and contractualism.

These approaches and others have significant merits and uses. They can help one see ethical dilemmas in a new light, they can help illustrate some of the unappealing aspects of utilitarianism, they can be combined with utilitarianism so that one avoids particular bad behaviors, and they can provide potential explanations for some particular ethical intuitions.

But I don’t think any of them are as close to being comprehensive systems - able to give guidance on practically any ethics-related decision - as the approach I've outlined above. As such, I think they don’t offer the same hopes as the approach I've laid out in this post.

One key point is that other ethical frameworks are often concerned with duties, obligations and/or “rules,” and they have little to say about questions such as “If I’m choosing between a huge number of different worthy places to donate, or a huge number of different ways to spend my time to help others, how do I determine which option will do as much good as possible?”

The approach I've outlined above seems like the main reasonably-well-developed candidate system for answering questions like the latter, which I think helps explain why it seems to be the most-attended-to ethical framework in the effective altruism community.

Appendix: aspects of the utilitarianism debate I'm skipping

Most existing writing on utilitarianism and/or sentientism is academic philosophy work. In academic philosophy, it's generally taken as a default that people are searching for some coherent ethical system; the "common-sense or non-principle-derived approach" generally doesn't take center stage (though there is some discussion of it under the heading of moral particularism).

With this in mind, a number of common arguments for utilitarianism don't seem germane for my purposes, in particular:

- A broad suite of arguments of the form, "Utilitarianism seems superior to particular alternatives such as deontology or virtue ethics." In academic philosophy, people often seem to assume that a conclusion like "Utilitarianism isn't perfect, but it's the best candidate for a consistent, principled system we have" is a strong argument for utilitarianism; here, I am partly examining what we gain (and lose) by aiming for a consistent, principled system at all.

- Arguments of the form, "Utilitarianism is intuitively and/or obviously correct; it seems clear that pleasure is good and pain is bad, and much follows from this." While these arguments might be compelling to some, it seems clear that many people don't share the implied view of what's "intuitive/obvious." Personally, I would feel quite uncomfortable making big decisions based on an ethical system whose greatest strength is something like "It just seems right to me [and not to many others]," and I'm more interested in arguments that utilitarianism (and sentientism) should be followed even where they are causing significant conflict with one's intuitions.

In examining the case for utilitarianism and sentientism, I've left arguments in the above categories to the side. But if there are arguments I've neglected in favor of utilitarianism and sentientism that fit the frame of this series, please share them in the comments!

Next in series: Defending One-Dimensional Ethics

Footnotes

- I don't have a cite for these being the key properties of a good scientific theory, but I think these properties tend to be consistently sought out across a wide variety of scientific domains. The simplicity criterion is often called "Occam's razor," and the other criterion is hopefully somewhat self-explanatory. You could also see these properties as essentially a plain-language description of Solomonoff induction.

- It's possible to combine sentientism with a non-hedonist theory of well-being. For example, one might believe that only beings with the capacity for pleasure and suffering matter, but also that once we've determined that someone matters, we should care about what they want, not just about their pleasure and suffering.

- At first [the] insider/outsider distinction applied even between the citizens of neighboring Greek city-states; thus there is a tombstone of the mid-fifth century B.C. which reads:

This memorial is set over the body of a very good man. Pythion, from Megara, slew seven men and broke off seven spear points in their bodies … This man, who saved three Athenian regiments … having brought sorrow to no one among all men who dwell on earth, went down to the underworld felicitated in the eyes of all.

This is quite consistent with the comic way in which Aristophanes treats the starvation of the Greek enemies of the Athenians, starvation which resulted from the devastation the Athenians had themselves inflicted. Plato, however, suggested an advance on this morality: he argued that Greeks should not, in war, enslave other Greeks, lay waste their lands or raze their houses; they should do these things only to non-Greeks. These examples could be multiplied almost indefinitely. The ancient Assyrian kings boastfully recorded in stone how they had tortured their non-Assyrian enemies and covered the valleys and mountains with their corpses. Romans looked on barbarians as beings who could be captured like animals for use as slaves or made to entertain the crowds by killing each other in the Colosseum. In modern times Europeans have stopped treating each other in this way, but less than two hundred years ago some still regarded Africans as outside the bounds of ethics, and therefore a resource which should be harvested and put to useful work. Similarly Australian aborigines were, to many early settlers from England, a kind of pest, to be hunted and killed whenever they proved troublesome.

- E.g., https://www.openphilanthropy.org/2017-report-consciousness-and-moral-patienthood#ProposedCriteria

- I mean, I agree with the critic that the "track record" point is far from a slam dunk, and that "utilitarians were ahead of the curve" doesn't necessarily mean "utilitarianism was ahead of the curve." But I don't think the "track record" argument is intended to be a philosophically tight point; I think it's intended to be interesting and suggestive, and I think it succeeds at that. At a minimum, it may imply something like "The kind of person who is drawn to utilitarianism+sentientism is also the kind of person who makes ahead-of-the-curve moral judgments," and I'd consider that an argument for putting serious weight on the moral judgments of people who are drawn to utilitarianism+sentientism today.